Web 3.0 is the next step in the evolution of the Internet and Web applications.

Context

Background

- The internet is a telecommunications network that originated in the military in the 1960s (as ARPANET) and later spread to the scientific and academic community.

- The World Wide Web was released to the public in 1991. From that point on, the internet spread at a completely unexpected speed.

- The Internet has changed dramatically since its inception. From Internet Relay Chat (IRC) to modern social media, it has become a vital part of human interaction, and it continues to evolve.

- To say that the internet has created astounding pathways for opportunity and success is an understatement.

- It has democratized access to information, created boundless economic opportunities and connected people worlds apart.

- In 1990, fewer than 1% of the world’s population was online. Thirty years later, that share had jumped to 59% of the world’s ever-growing population.

- This growth has come at a price. Today’s internet looks less like its inventors’ visions of a decentralized, democratic information network and more like an oligopoly controlled largely by the companies that own the data.

- Big Tech platforms know who and what we search for, who our friends and family are, and what we like and dislike.

- These companies capitalize on our digital identities for their lucrative advertising-based business models, capturing enormous value at the expense of the privacy of their users.

- Most users accept the privacy and opportunity costs because of the convenience and value these services provide.

Analysis

A brief history of the evolution of the Internet

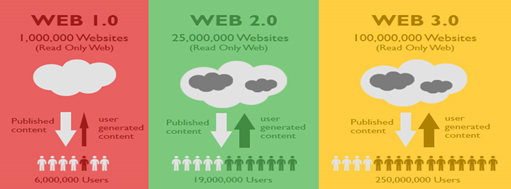

Websites and web applications have changed dramatically over the last decades. They have evolved from static sites to data-driven sites that users can interact with and change.

Web 1.0

- The original Internet was based on what is now known as Web 1.0.

- The term dates from 1999, when author and web designer Darcy DiNucci distinguished between Web 1.0 and Web 2.0.

- Back in the early 1990s, websites were built using static HTML pages that only had the ability to display information – there was no way for users to change the data.

Web 2.0

- That all changed during the late 1990s when the shift toward a more interactive Internet started taking form.

- With Web 2.0, users were able to interact with websites through the use of databases, server-side processing, forms, and social media.

- This brought forth a change from a static to a more dynamic web.

- Web 2.0 brought an increased emphasis on user-generated content and interoperability between different sites and applications.

- Web 2.0 was less about observation and more about participation.

- By the mid-2000s, most websites had made the transition to Web 2.0.

Understanding the ‘new internet’

- Web 3.0 is the next generation of Internet technology that heavily relies on the use of machine learning and artificial intelligence (AI).

- It aims to create more open, connected, and intelligent websites and web applications, which focus on using a machine-based understanding of data.

- Through the use of AI and advanced machine learning techniques, Web 3.0 aims to provide more personalized and relevant information at a faster rate.

- This can be achieved through smarter search algorithms and developments in Big Data analytics.

- Current websites typically have static information or user-driven content, such as forums and social media.

- While this allows information to be published to a broad group of people, it may not cater to a specific user’s need.

- A website should be able to tailor the information it provides to each individual user, similar to the dynamism of real-world human communication.
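The idea of tailoring results to each user can be illustrated with a deliberately simple sketch. It assumes a bag-of-words representation and cosine similarity, which are far simpler than the machine learning models described above; the function names, the `weight` parameter, and the sample documents and profiles are all hypothetical. The same query is ranked differently depending on what the user's profile says they care about.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term frequencies (lowercased, split on whitespace)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def personalize(query: str, profile: str, docs: dict, weight: float = 0.3) -> list:
    """Rank document IDs by query relevance blended with similarity to a user profile.

    `weight` (hypothetical) sets how strongly the profile shifts the ranking:
    0.0 ignores the profile, 1.0 ignores the query.
    """
    q, p = vectorize(query), vectorize(profile)
    scores = {}
    for doc_id, text in docs.items():
        d = vectorize(text)
        scores[doc_id] = (1 - weight) * cosine(q, d) + weight * cosine(p, d)
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical sample documents and user profiles.
    docs = {
        "ml": "python tutorial for machine learning and data science",
        "web": "python tutorial for web development with django",
        "snake": "python snake care guide",
    }
    print(personalize("python tutorial", "data science machine learning", docs))
    # ['ml', 'web', 'snake']
    print(personalize("python tutorial", "web development django", docs))
    # ['web', 'ml', 'snake']
```

Blending a query score with a profile score keeps results relevant to what was asked while still letting each user's interests reorder them; real systems learn these signals rather than hand-weighting them.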

The 4 Properties of Web 3.0

To understand the nuances and subtleties of Web 3.0, let’s look at its four properties:

- Semantic Web

- Artificial Intelligence

- 3D Graphics

- Ubiquity

Challenges of Web 3.0 Implementation

- Vastness: The internet is enormous. It contains billions of pages, and existing technology has not yet been able to eliminate all semantically duplicated terms. Any reasoning system that aims to read all this data and understand its meaning will have to handle vast amounts of data.

- Vagueness: User queries are often imprecise and can be extremely vague. Fuzzy logic is used to deal with vagueness.

- Uncertainty: The internet deals with scores of uncertain values. Probabilistic reasoning techniques are generally employed to address uncertainty.

- Inconsistency: Inconsistent data can lead to logical contradictions and unreliable analysis.

- Deceit: While AI can help filter data, what if the data provided is intentionally wrong and misleading? Cryptographic techniques are currently used to mitigate this problem, for example by verifying where data came from and that it has not been altered.
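The two techniques named above for vagueness and uncertainty can be illustrated with a small sketch. It assumes a linear ("ramp") membership function for fuzzy logic and plain Bayes' rule for probabilistic reasoning; the thresholds, probabilities, and function names are hypothetical and chosen only for illustration.

```python
def cheap_membership(price: float, fully_cheap: float = 300.0, not_cheap: float = 800.0) -> float:
    """Fuzzy membership: degree (0.0 to 1.0) to which a price counts as 'cheap'.

    Hypothetical thresholds: fully cheap at or below `fully_cheap`, not cheap at or
    above `not_cheap`, with a linear ramp in between instead of a hard cut-off.
    """
    if price <= fully_cheap:
        return 1.0
    if price >= not_cheap:
        return 0.0
    return (not_cheap - price) / (not_cheap - fully_cheap)


def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Bayes' rule: P(H | E) = P(E | H) * P(H) / P(E)."""
    p_e = p_e_given_h * prior + p_e_given_not_h * (1 - prior)
    return p_e_given_h * prior / p_e


if __name__ == "__main__":
    # Vagueness: for the query "cheap laptop", each result matches to a degree.
    prices = {"laptop_a": 250, "laptop_b": 550, "laptop_c": 900}
    print({name: cheap_membership(price) for name, price in prices.items()})
    # {'laptop_a': 1.0, 'laptop_b': 0.5, 'laptop_c': 0.0}

    # Uncertainty: update the belief that a claim is true after an independent
    # source confirms it (hypothetical probabilities).
    print(f"{posterior(prior=0.5, p_e_given_h=0.8, p_e_given_not_h=0.2):.2f}")
    # 0.80
```

Fuzzy membership grades how well an item fits a vague word like "cheap" instead of forcing a yes/no answer, while the Bayesian update expresses how much a piece of evidence should change confidence in an uncertain value.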

What makes Web 3.0 ‘superior’ to its predecessors?

- No central point of control: Since middlemen are removed from the equation, user data will no longer be controlled by them. This reduces the risk of censorship by governments or corporations and cuts down the effectiveness of Denial-of-Service (DoS) attacks.

- Increased information interconnectivity: As more products become connected to the Internet, larger data sets provide algorithms with more information to analyze. This can help them provide more accurate information that accommodates the specific needs of the individual user.

- More efficient browsing: When using search engines, finding the best result used to be quite challenging. Over the years, however, they have become better at finding semantically relevant results based on search context and metadata. This results in a more convenient browsing experience that can help anyone find the exact information they need with relative ease.

- Improved advertising and marketing: No one likes being bombarded with online ads. However, if the ads are relevant to one's interests and needs, they could be useful instead of annoying. Web 3.0 aims to improve advertising by leveraging smarter AI systems and by targeting specific audiences based on consumer data.

- Better customer support: When it comes to websites and web applications, customer service is key for a smooth user experience. Due to the massive costs, though, many web services that become successful are unable to scale their customer service operations accordingly. Through the use of smarter chatbots that can talk to multiple customers simultaneously, users can enjoy a superior experience when dealing with support agents.

- Trustworthy yet decentralized: Instead of relying on trusted intermediaries to coordinate users, Web 3.0 systems use mechanisms such as cryptographic proofs and economic incentives to assure users that the system is working as expected. As a result, Web 3.0 networks are trustworthy, yet decentralized.
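One simple cryptographic mechanism that lets anyone check data without trusting an intermediary is a hash chain, in which each record commits to the hash of the record before it. The sketch below is an assumption-laden illustration, not a description of any specific Web 3.0 network: it uses SHA-256 from Python's standard library, and the function names and sample records are hypothetical. Real systems layer digital signatures and a consensus mechanism (such as proof of work or proof of stake) on top; this shows only the tamper-evidence part.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """SHA-256 digest of a block's contents, serialized as canonical JSON."""
    payload = json.dumps({"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list) -> list:
    """Link records so each block commits to the hash of the block before it."""
    chain, prev = [], GENESIS_PREV
    for i, data in enumerate(records):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev_hash": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain: list) -> bool:
    """Recompute every hash; an edited block fails either its own check or the next block's link."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev_hash"] != prev:
            return False
        if block_hash(block["index"], block["data"], block["prev_hash"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    ledger = build_chain(["alice pays bob 5", "bob pays carol 2"])  # hypothetical records
    print(verify_chain(ledger))  # True
    ledger[0]["data"] = "alice pays bob 500"  # tamper with history
    print(verify_chain(ledger))  # False
```

Because verification only requires recomputing hashes, every participant can check the history independently; that is the sense in which trust comes from the mechanism rather than from a middleman.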

Closing thoughts

Because these systems require collective user cooperation to succeed, these projects make it a core tenet to protect, not exploit, their users and their privacy. These Web 3.0 systems – the new internet – could upend advertising-based business models, so far among the most successful business models of all time.

Although there is no concrete definition of Web 3.0 yet, it is already in motion and will surely continue through further iterations.